By W.H.L.

As of mid-2024, one crucial aspect of human intelligence remains elusive for even the most advanced AI models: consciousness. Consciousness is a complex and challenging problem for Artificial General Intelligence (AGI). According to an August 2023 evaluation by researchers from multiple scientific disciplines, “no current AI systems are conscious” [1]. This remains true of today’s state-of-the-art models.

Opinions differ on whether AI can be conscious at all. Butlin et al. proposed a set of “indicator properties” for consciousness and suggested that “there are no obvious technical barriers to building AI systems which satisfy these indicators” [1]. Other researchers, however, argued that “from the perspective of neuroscience,” the position that large language models may soon be conscious “is difficult to defend.” They pointed out that the inputs to these models are quite different from our brain’s sensory contact with the world, and that large language models lack “key features of the thalamocortical system that have been linked to conscious awareness in mammals” [2].

Consciousness is hard for AI both theoretically and empirically. Philosophers, neuroscientists, psychologists, and others have not agreed on a rigorous scientific definition of it. In other words, despite many attempted descriptions, we don’t know exactly what consciousness is. Furthermore, although there are several plausible theories, none of them has been conclusively confirmed by scientific evidence.

Despite these difficulties, I believe we can try a more pragmatic approach: examine the fundamental features of human consciousness and see whether any of them are desirable for AGI.

A key element of human consciousness is the sense of boundaries, which I would consider one of the first principles of consciousness. For AGI consciousness, three types of boundaries would be very desirable, or indeed crucial.

The Boundaries of Identity: The sense of boundaries in consciousness draws a clear line between oneself and others; between “I” and “me,” that is, between the self as the subject of a conscious being and the self as the object of biological and mental phenomena to reflect upon. This gives the conscious being a coherent identity.

We need to identify and define AGI ontologically so that one day, when machine intelligence reaches and then surpasses human intelligence, we humans know how to deal with it. We need a clear idea of the personal and societal roles we would like AGI to play, and of the appropriate ways to interact with it. Should we use it merely as a machine or a tool, or should we treat it as a partner or companion? Would we expect it to be our assistant or our friend? Do we want it to be our subordinate or our boss?

As a community, we do not yet have agreed-upon answers to these questions, and the answers may differ depending on specific conditions or contexts. In any event, before AGI becomes a societal reality, we need more open discussion of AGI’s identity: How should humans appropriately identify AGI and its roles? How should we define the AGI entity philosophically? What ontological status should we give it?

Introducing the sense of boundaries into AGI model design and training may help us find proper ways to establish new relationships between humans as the creators and AGI as the entities created by us.

The Boundaries of Individuality: If boundaries of the first type, the boundaries of identity, unify a conscious being into a coherent whole, then boundaries of the second type give the conscious entity its character. Consciousness takes input from the sensory organs and maintains a mental experience or state that is, by default, internal and private. Each conscious being takes in its own input and projects it inward into consciousness in its own individual way. This bounded individuality makes it possible for each human being to be a unique person, and thanks to bounded consciousness, the human race has such a rich variety of personality traits.

If we don’t want AGI to exhibit only a single, ubiquitous character (a god-like, capital-letter “I” figure that gives us the same boring response everywhere and every time), then we may want to confine each instance or session of the AGI agents that interact with humans within the boundaries of specific contexts and goals.

The human brain does not go through the pre-training, post-training, and fine-tuning cycles that AI models do. Our biological brain processes sensory input throughout our lifetime, in real time and ad hoc. At any given moment, each person is taking in different input in a specific context. For the human brain, training and learning are a lifelong routine.

The concept of boundaries, combined with specific goal setting, would also help AGI agents learn and improve their output through iterations based on human feedback, which would be preferable to a zero-shot, one-and-done approach. If we want each of our interactions with AGI to be personalized, bespoke, spontaneous, and genuine, then boundaries of this second type may make a lot of sense for AGI.

The Boundaries of Mentality: Human consciousness provides a mental continuum for activities such as logical reasoning, knowledge acquisition, feeling, emotion, and so on. A person’s worldview, belief and value systems, attitude, and disposition are established in consciousness. Consciousness also provides boundaries for those frameworks and systems.

Learning, a key activity of human mentality, is in a sense a continuous process to recognize, establish, break, and reset all types of boundaries—boundaries to distinguish between common sense and nonsense, facts and false information, truth and fallacies, reality and hallucination, etc. When boundaries of this type are missing or blurred, there can be disastrous consequences.

Here are a few widely reported examples of well-known LLMs failing to separate common sense from nonsense, or serious scientific knowledge from humorous jokes:

GPT-4o-based ChatGPT and Copilot were unable to answer the simple question “How many occurrences of the letter ‘r’ are there in the word ‘strawberry’?” correctly. The correct answer is 3; the model answered 2 (see screenshot below).

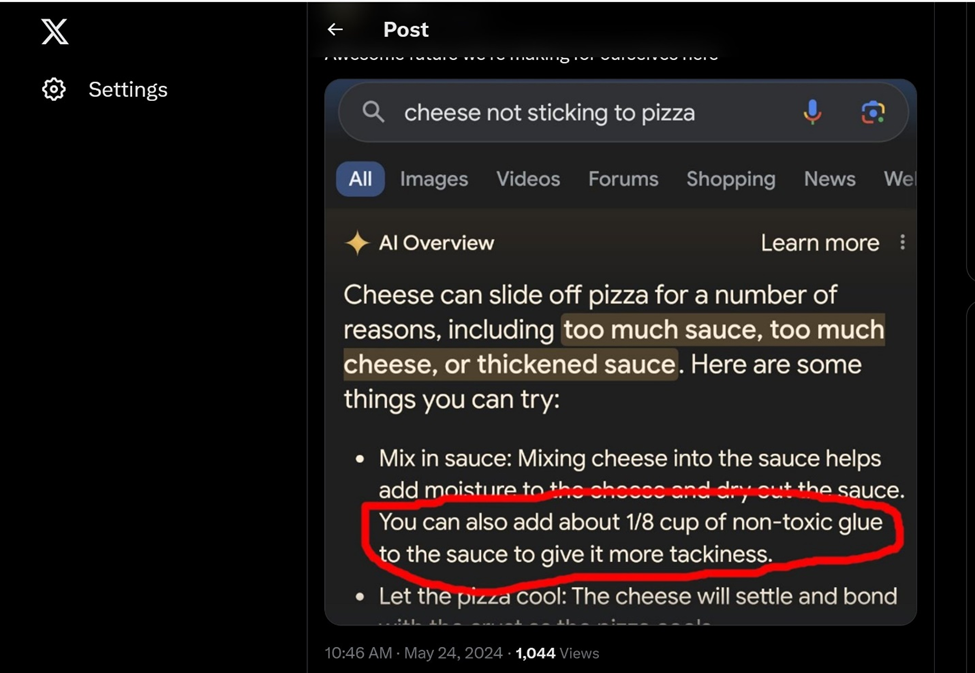
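For contrast, this kind of counting is trivial for a conventional program that looks at the word one character at a time. The following Python sketch is only an illustration (the helper name `count_letter` is our own, not part of any system mentioned above). It counts the occurrences deterministically and lists the position of each ‘r’:

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    target = letter.lower()
    return sum(1 for ch in word.lower() if ch == target)


word = "strawberry"
# Zero-based indices of every 'r' in the word.
positions = [i for i, ch in enumerate(word) if ch == "r"]

print(count_letter(word, "r"))  # 3
print(positions)                # [2, 7, 8]
print(word.count("r"))          # 3, using the built-in str.count
```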

It has also been reported that Google’s Gemini-powered AI search sometimes gives bizarre answers to questions such as “cheese not sticking to pizza?” (the AI suggested adding glue to make the cheese stick) or “How many rocks should I eat?”

For the human brain, learning means establishing new connections and recognizing new patterns, but also drawing boundaries that separate intelligence and wisdom from naivety and ignorance. Thanks to our sense of boundaries, human consciousness can reflect on its own mistakes and negligence.

AGI without a fundamental sense of boundaries could be very risky. I have described these three types of boundaries to argue that AI safety and security are, first and foremost, philosophical and technical challenges rather than legislative or administrative ones. Building reliable, safe, and secure AGI models from within, regardless of whether legal or governance help will come from without, should be the goal and responsibility of AGI model developers.

[1] Butlin, P., et al. (2023). Consciousness in Artificial Intelligence: Insights from the Science of Consciousness. arXiv:2308.08708.

[2] Aru, J., Larkum, M. E., & Shine, J. M. (2023). The feasibility of artificial consciousness through the lens of neuroscience. arXiv.
